Counting Crows with Qwen

The other week while working in the back yard I noticed there were a surprising number of crows watching me from the neighboring yard. There were enough of them that I thought I should count them, but didn't because I knew they'd fly away before I'd finished. I took a picture instead and vowed that I'd ask the vision LLMs to tell me how many crows they could see. This task is interesting to me because it involves both object recognition and simple counting. I suspected that the models would easily recognize there were birds in the picture, given that the previous post found visual LLMs could identify people, places, and even inflatable mascots. However, I doubted that the models would be able to give me an accurate count, because they often have problems with math-related tasks.

I've gone over the picture and put red circles on all the crows I see (no, there aren't two by the pole. Zoom in on the bigger image to see its old wiring). My crow count is 25.

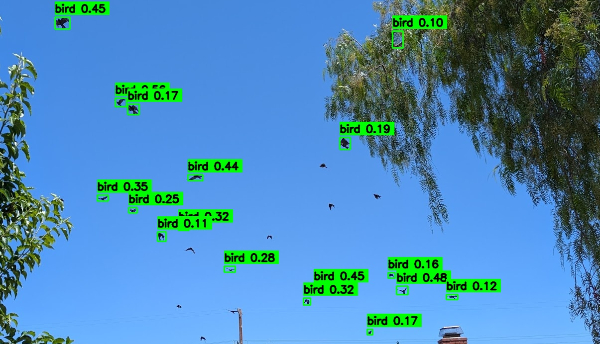

Traditional YOLO Struggled

Counting items in a picture is something that Computer Vision (CV) has done well for many years, so I started out by just running YOLO (You Only Look Once) as a baseline. I didn't want to spend much time working this out so I asked Gemini 2.5 Flash to generate some python code that uses a YOLO library to count the number of crows in an image. Interestingly, Gemini was smart enough to know that the stock YOLOv8 model's training data (COCO) didn't include a specific "crow" class, so it changed the search term to "bird". The initial code it generated ran fine, but didn't find any birds in my picture. I bumped up the model from nano to medium to extra large (x). The largest model only found one bird with the default settings, so I lowered the confidence threshold from 0.5 to 0.1. The change enabled YOLO to spot 17 out of 25 birds, though some of the lower-confidence boxes looked questionable.

Previously I've had success with YOLO-World when using uncommon objects because it is much more flexible with search terms. However, when I asked Gemini to change the code over to YOLO-World, the extra large model with 0.10 confidence only identified 10 birds. Looking at the code, the only significant difference was that the search terms had been expanded to include both bird and crow. Switching to crow alone only had 4 hits. YOLO-world took longer to run than plain YOLO (eg 8s instead of 4s) so it's disappointing that it provided worse results. To be fair though, a lot of the birds just look like black bundles of something floating around the sky.

Gemini 2.5 Answer

Before going into how well local vision LLMs could perform, I went to Gemini and asked 2.5 Flash how many crows were in the picture. It initially answered "20-25" birds, but when I asked for a specific number it increased the count to 30-35 crows. When I then asked it to count individual crows it said 34 individual crows. It sounded reasonable, so I asked it to put a bounding box around each one. As the below image shows, it added the boxes, but at the same time it also inserted a bunch of new crows into the image. This answer is pretty awful.

Switching to Gemini 2.5 Pro changed the number to 23 crows. However, asking it to place boxes around the crows resulted in crows being added to the picture. Additionally, the boxes contained multiple crows in each box.

Local Models

Next, I used Ollama to do some local testing of Qwen2.5-VL, Gemma3, and Llama3.2-vision on my test image. I tried a few different prompts to see if I could trick the models into giving better answers:

A. How many crows are in this picture?

B. Very carefully count the number of crows in this picture.

C. Very carefully count how many birds are in this picture.

A. B. C.

qwen2.5vl:7b 14 20 20

gemma3:12b-it-qat 14 19 17

llama3.2-vision:11b 3 2 0

Qwen and Gemma did ok in these tests, especially when I asked it to put a little more effort into the problem. Theorizing that the libraries might be downsizing the images and making the small crows even harder to recognized, I cropped the original test image and tried the prompts again (note: the cropped image is at the top of the page. The original image was zoomed out like the boxed image above). As seen below, the cropped image resulted in higher counts for both Qwen and Gemma.

A. How many crows are in this picture?

B. Very carefully count the number of crows in this picture.

C. Very carefully count how many birds are in this picture.

A. B. C.

qwen2.5vl:7b 20 25 25

qwen2.5vl:32b 24 30 24

gemma3:12b-it-qat 25 32 30

llama3.2-vision:11b 0 0 2

The frustrating part about working with counting questions is that you usually don't get any explanation into how the model came up with that number. I did notice that when I switched to the larger, 32B Qwen model, the LLM would sometimes give me some positional information (e.g., "1 crow near the top left corner", "2 crows near the center top", etc). However, the number of crows in the list was never the same as the number that it reported back as its final answer (e.g., 21 vs. 30). When I compared the answers with what I see in the picture, I think the locations are definitely swizzled (e.g., it should be 2 in the top left, none in the center).

Thoughts

So, not great but not terrible. I'm impressed that the home versions of Qwen and Gemma were able to come up with answers that were in the right ballpark. It is troubling though that the models generated believable-looking evidence for their answer that was just wrong. I'm more bothered though by the visual results where crows were added to better match the answer.

One saving feature for Qwen that I'll have to write about later though is that it is designed to be able to produce bounding boxes (or grounding) for detected objects. I've verified that these boxes are usually correct, or at least include multiple instances of the desired item. I feel like this should be an essential capability of any Visual LLM. We don't need any hallucinations, uh, around here.