2015-02-08 Sun

gis edison code

Lately I've been spending a good bit of my free time at home doing ad hoc analysis of airline data that I've scraped off of public websites. It's been an interesting hobby that's taught me a lot about geospatial data, the airline world, and how people go about analyzing tracks. However, working with scraped data can be frustrating: it's a pain to keep a scraper going and quite often the data you get doesn't contain everything you want. I've been thinking it'd be useful if I could find another source of airline data.

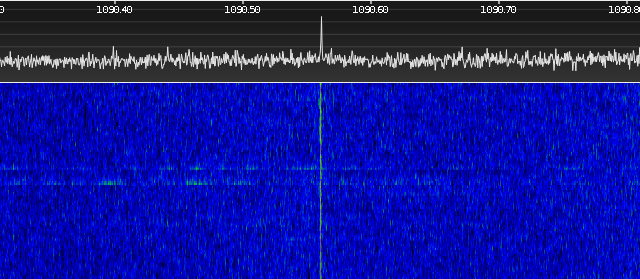

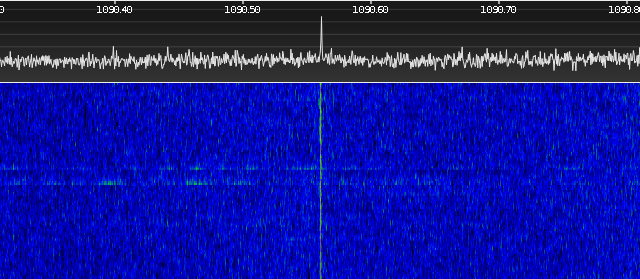

I started looking into how the flight tracking websites obtain their data and was surprised to learn that a lot of it comes from volunteers. These volunteers hook up a software-defined radio (SDR) to a computer, listen for airline position information broadcast over ADS-B, and then upload the data to aggregators like FlightRadar24. I've been looking for an excuse to tinker with SDR, so I went about setting up a low-cost data logger of my own that could grab airline location information with an SDR receiver and then store the observations for later analysis. Since I want to run the logger for long periods of time, I decided it'd be useful to set up a small, embedded board of some kind to do the work continuously in a low-power manner. This post summarizes how I went about making the data logger out of an Intel Edison board and an RTL-SDR dongle, using existing open-source software.

RTL-SDR and ADS-B

The first thing I needed to do for my data logger was find a cheap SDR that could plug into USB and work with Linux. Based on many people's recommendations, I bought an RTL-SDR USB dongle from Amazon that only cost $25 and came with a small antenna. The RTL-SDR dongle was originally built to decode European digital TV, but some clever developers realized that it could be adapted to serve as a flexible tuner for GNU Radio. If you look around on YouTube, you'll find plenty of how-to videos that explain how you can use an RTL-SDR and GNU Radio to decode a lot of different signals, including pager transmissions, weather satellite imagery, smart meter chirps, and even some parts of GSM. Of particular interest to me though was that others have already written high-quality ADS-B decoder programs that can be used to track airplanes.

ADS-B (Automatic Dependent Surveillance-Broadcast) is a relatively new standard that the airline industry is beginning to use to help prevent collisions. Airplanes with ADS-B transmitters periodically broadcast their vital information in a digital form. This information varies depending on the transmitter. On a commercial flight you often get the flight number, the tail number, longitude, latitude, altitude, current speed, and direction. On private flights you often only see the tail number. The standard isn't mandatory until 2020, but most commercial airlines seem to be using it.

If you have an RTL-SDR dongle, it's easy to get started with ADS-B. Salvatore Sanfilippo (of Redis fame) has an open source program called dump1090 that is written in C and does all of the decode work for you. The easiest way to get started is to run in interactive mode, which dumps a nice, running text display of all the different planes the program has seen within a certain time range. The program also has a handy network option that lets you query the state of the application through a socket. This option makes it easy to interface other programs to the decoder without having to link in any other libraries.
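To give a feel for what comes out of that socket, here is a minimal Python sketch of a client. It assumes dump1090 was started with `--net` and is serving comma-separated `MSG` lines on port 30003, and it uses the same field positions as the Perl logger later in this post (aircraft ID in field 4, flight in 10, altitude in 11, latitude and longitude in 14 and 15). The function names and the dictionary keys are made up for illustration.

```python
import socket


def parse_sbs1(line):
    """Parse one comma-separated line from dump1090's port-30003 feed.

    Returns a dict for MSG type 1 (identification) and type 3 (position)
    messages, or None for anything else.
    """
    fields = line.strip().split(",")
    if len(fields) < 16 or fields[0] != "MSG" or fields[1] not in ("1", "3"):
        return None
    record = {"type": int(fields[1]), "icao": fields[4]}
    if fields[1] == "1":
        record["flight"] = fields[10].strip()
    else:
        # Any of these can be empty, so fall back to None.
        record["altitude_ft"] = int(fields[11]) if fields[11] else None
        record["lat"] = float(fields[14]) if fields[14] else None
        record["lon"] = float(fields[15]) if fields[15] else None
    return record


def stream_messages(host="localhost", port=30003):
    """Yield parsed records from a running `dump1090 --net` instance."""
    with socket.create_connection((host, port)) as sock:
        for line in sock.makefile("r", encoding="ascii", errors="replace"):
            record = parse_sbs1(line)
            if record is not None:
                yield record
```

Calling `for rec in stream_messages(): print(rec)` on the machine running dump1090 should print one dictionary per identification or position message.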

Intel Edison

The other piece of hardware I bought for this project was an Intel Edison, which is Intel's answer to the Raspberry Pi. Intel packaged a 32b Atom CPU, WiFi, flash, and memory into a board that's about the size of two quarters. While Edison is not as popular as the Pi, it does run 32b x86 code. As a lazy person, I find x86 compatibility appealing because it means that I can test things on my desktop and then just move the executables/libraries over to the Edison without having to cross compile anything.

The small size of the Edison boards can make them difficult to interface with, so Intel offers a few carrier dev kits that break out the signals on the board to more practical forms. I bought the Edison Arduino board ($90 with the Edison module at Fry's), which provides two USB ports (one of which can be either a micro connector or the old clunky connector), a microSD slot for removable storage, a pad for Arduino shields, and a DC input/voltage regulator for a DC plug. It seems like the perfect board for doing low-power data collection.

Running dump1090 on the Edison

The first step in getting dump1090 to work on the Edison was compiling it as a 32b application on my desktop. This task took more effort than just adding the -m32 flag to the command line, as my Fedora 21 desktop was missing 32b libraries. I found I had to install the 32b versions of libusbx, libusbx-devel, rtl-sdr, and rtl-sdr-devel. Even after doing that, pkgconfig didn't seem to like things. I eventually wound up hardwiring all the lib directory paths in the Makefile.

The next step was transferring the executable and missing libraries to the Edison board. After some trial and error I found the only things I needed were dump1090 and the librtlsdr.so.0 shared library. I transferred these over with scp. I had to point LD_LIBRARY_PATH to pick up on the shared library, but otherwise dump1090 seemed to work pretty well.

Simple Logging with Perl

The next thing to do was write a simple Perl script that issued a request over a socket to the dump1090 program and then wrote the information to a text file stored on the sdcard. You could probably do this in a bash script with nc, but Perl seemed a little cleaner. The one obstacle I had to overcome was installing the Perl socket package on the Edison. Fortunately, I was able to find the package in an unofficial repo that other Edison developers are using.

    #!/usr/bin/perl
    # Log dump1090 port-30003 messages to one text file per day on the SD card.

    use strict;
    use warnings;
    use IO::Socket;

    my $dir = "/media/sdcard";

    # dump1090 may not be listening yet, so retry once a second until it is.
    my $sock;
    do {
        sleep 1;
        $sock = IO::Socket::INET->new(PeerAddr => 'localhost',
                                      PeerPort => '30003',
                                      Proto    => 'tcp');
    } while (!$sock);

    my $prv_date = "";
    my $fh;

    while (<$sock>) {
        chomp;
        next if (!/^MSG,[13]/);    # keep only ID (1) and position (3) messages

        my @x = split /,/;
        my ($id, $d1, $t1, $d2, $t2) = ($x[4], $x[6], $x[7], $x[8], $x[9]);
        my ($flt, $alt, $lat, $lon)  = ($x[10], $x[11], $x[14], $x[15]);

        # localtime returns a 0-based month and years since 1900.
        my ($day, $month, $year) = (localtime)[3, 4, 5];
        my $date = sprintf '%02d%02d%02d', $year % 100, $month + 1, $day;

        my $line;
        if ($x[1] == 1) {
            $line = "1\t$id\t$flt\t$d1\t$t1";
        } else {
            $line = "3\t$id\t$lat\t$lon\t$alt\t$d1\t$t1\t$d2\t$t2";
        }

        # Start a new log file whenever the date changes.
        if ($date ne $prv_date) {
            close $fh if ($prv_date ne "");
            open($fh, '>>', "$dir/$date.txt") or die "Bad file io";
            $fh->autoflush;
            $prv_date = $date;
        }

        print $fh "$line\n";
    }
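For the later analysis step, the daily files can be read back with a few lines of Python. This is only a sketch under the assumption that the files contain the tab-separated layout written by the logger (a leading 1 or 3, the aircraft ID, and for type 3 the latitude, longitude, and altitude). The `load_positions` name and the tuple layout are made up for illustration.

```python
from collections import defaultdict


def load_positions(lines):
    """Group type-3 (position) records by aircraft ID.

    Expects the tab-separated lines written by the logger:
    3, id, lat, lon, alt, followed by the date/time columns.
    """
    tracks = defaultdict(list)
    for line in lines:
        cols = line.rstrip("\n").split("\t")
        if len(cols) < 5 or cols[0] != "3":
            continue  # skip ID records and anything malformed
        try:
            lat, lon = float(cols[2]), float(cols[3])
        except ValueError:
            continue  # position fields were empty or garbled
        alt = int(cols[4]) if cols[4].lstrip("-").isdigit() else None
        tracks[cols[1]].append((lat, lon, alt))
    return dict(tracks)
```

Any iterable of lines works as input, so an open file handle for one of the daily logs can be passed in directly.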

Starting as a Service

The last step of the project was writing some init scripts so that the dump1090 program and the data capture script would run automatically when the board is turned on. Whether you like it or not, the default OS on the Edison uses systemd to control how services are launched on the board. I wound up using someone else's script as a template for my services. The first thing to do was to create the following cdu_dump1090.service script in /lib/systemd/system to get the system to start the dump1090 app. Note that systemd wants to be the one that sets your environment vars.

    [Unit]
    Description=dump1090 rtl service
    After=network.target

    [Service]
    Environment=LD_LIBRARY_PATH=/home/root/rtl
    ExecStart=/home/root/rtl/dump1090 --net --quiet
    Environment=NODE_ENV=production

    [Install]
    WantedBy=multi-user.target

Next, I used the following cdu_store1090.service script to launch my Perl script. Even with a slight delay at start I was finding that the logger was sometimes starting up before the socket was ready and erroring out. Rather than mess with timing, I added the sleep/retry loop to the perl code.

    [Unit]
    Description=Store 1090 data to sdcard
    After=cdu_dump1090.service
    After=media-sdcard.mount

    [Service]
    ExecStartPre=/bin/sleep 2
    ExecStart=/home/root/rtl/storeit.pl
    Environment=NODE_ENV=production

    [Install]
    WantedBy=multi-user.target

In order to get systemd to use the changes at boot, I had to do the following:

    systemctl daemon-reload
    systemctl enable cdu_dump1090.service
    systemctl enable cdu_store1090.service

It Works!

The end result of the project is that the system works: the Edison now boots up and automatically starts logging flight info to its microSD card. Having an embedded solution is handy for me, because I can plug it into an outlet somewhere and have it run automatically without worrying about how much power it's using.

2015-02-20 Fri

gis planes bestof data

Not to sound crazy, but the Russians are watching me! Don't panic though: it's all been approved under an international treaty.

When I posted earlier about how I'd been collecting and analyzing airline data for fun, a friend of mine asked if military planes show up in the data. The answer turned out to be "not much", but while I was looking into it I started reading up on different ways governments fly military missions around the world for political reasons. For example, there were several stories in the news last year about how Russia has been sending long-range bombers into other countries' airspaces, presumably to test their air defenses or provoke a reaction. It would be interesting to look for these incursions in my data, but unfortunately they don't show up because the bombers are operating covertly, with their location beacons turned off.

Another story in the news last year involving military avionics was that the US and Russia had several heated exchanges over overt surveillance missions that were to take place under the Open Skies treaty. The planes used in these missions do appear in public flight datasets. After reading through several websites, I found the tail numbers for a few of these planes and learned that Russia just flew a mission over the US back in December. Not only did the plane show up in my data, it also flew over the city I live in!

Treaty on Open Skies

The Treaty on Open Skies allows the countries that have signed the treaty to fly unarmed surveillance flights over each other to promote better trust between governments. The treaty's origins can be traced back to Eisenhower, who proposed that the Soviet Union and the US implement a mutual surveillance program for better military transparency. While the proposal was rejected, the idea resurfaced at the end of the Cold War with support from many countries. The Treaty on Open Skies was signed by thirty-four state parties and went into effect in 2002. The treaty defines what kind of surveillance equipment can be used in the aerial missions, and specifies that the raw data captured during surveillance must be shared with all the other treaty members, if requested. Open Skies provides member countries with a better understanding of each other's capabilities, and makes it possible for all countries to participate, even if they do not have a sizable military budget.

Unfortunately, Open Skies also gets dragged into a number of political battles, as it permits a foreign country to come into your country and openly spy on you. Last year Russia threatened to block the US from flying a troop monitoring Open Skies mission over Ukraine. The surveillance missions were later allowed, but not before several congressmen in the US threatened to block Russian flights. While it's important to take steps to make sure the program remains mutually beneficial, it is clear that some politicians manipulate the facts about Open Skies to make it sound like it's doing more harm than good.

A Russian Surveillance Plane Flies to the US (December 8th)

Looking through older news articles I learned that Russia had previously flown a modified Tu-154M-LK-1 plane with the ID RA-85655 during their Open Skies missions over the US. Wikimedia Commons has several user-submitted pictures of this plane, including the below from Frank Kovalchek (Creative Commons 2.0). The plane has "Y.A. Gagarin Cosmonaut Training Center" written on the sides (left in Russian, right in English).

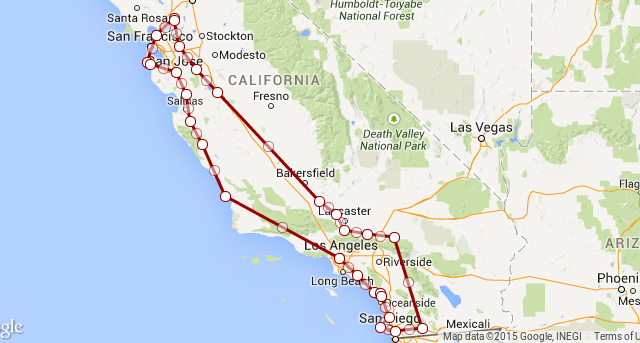

News stories reported that the plane was headed for Travis AFB, just north-east of the San Francisco Bay Area. A quick grep through the data I've been grabbing from FlightRadar24 revealed that RA-85655 traveled from Russia to the US on December 8th. Here's what the trip over the US looked like:

Surveillance of Southern California (December 10th)

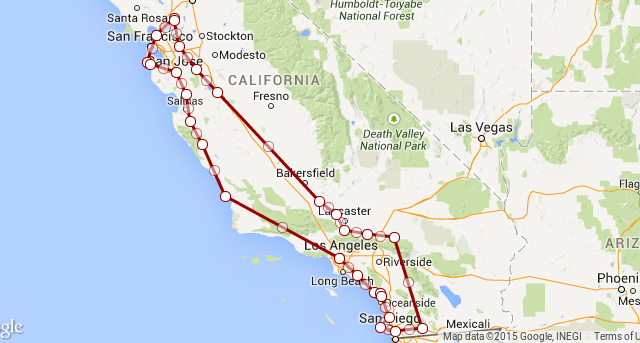

The best track I got for the plane was on December 10th as it made a loop through California. They covered a lot of ground in this sweep, visiting Travis AFB, San Francisco, San Jose, Vandenberg AFB, Long Beach, San Diego, and LA. It makes sense: Southern California is peppered with the defense industry and military bases. It's a little odd to me they flew to Half Moon Bay in Northern California. I would have thought they would cover more of the inner bay.

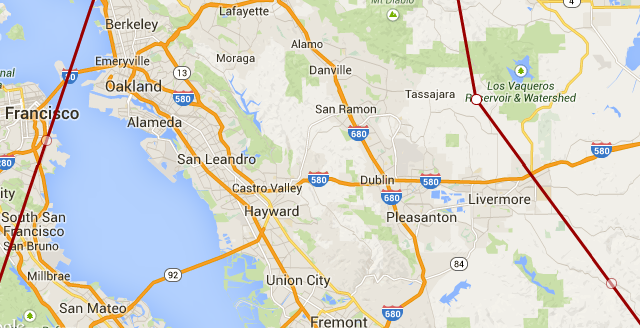

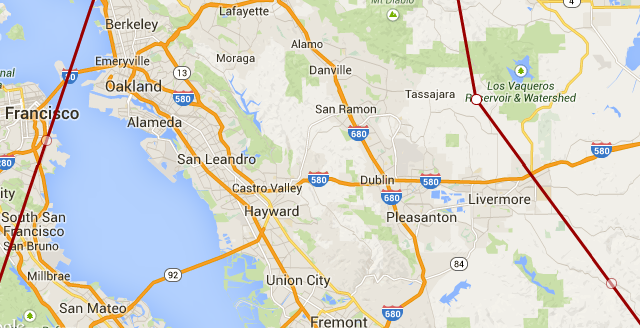

The part of this loop that's really interesting to me is that when they were close to finishing, they made a pass over Livermore (where I live). While we might have just been on the path back to Travis, it's quite possible that they wanted to take a look at NIF at LLNL. NIF is in the news because there's talk of them firing their lasers on plutonium in 2015. Maybe Russia wanted to get some "before" pictures, in case something goes horribly wrong. LLNL is the light brown block next to the 'e' in Livermore in the picture below. I believe it has a no-fly zone around it.

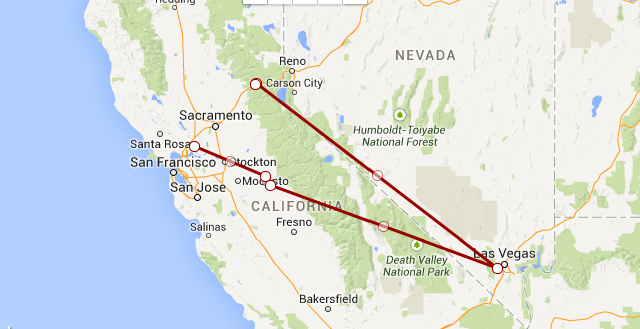

A Trip to Nevada (December 12th)

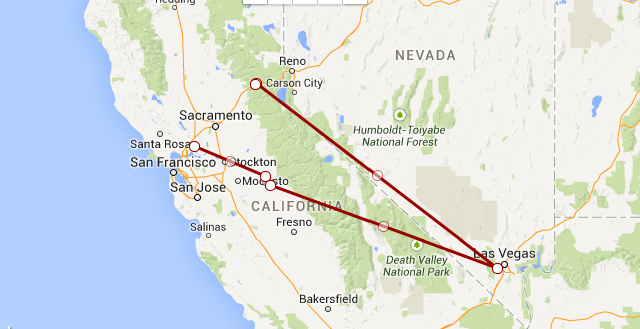

I didn't get any tracks for December 11th, but I did get a few points for the 12th. It looks like the plane made a trip out to Las Vegas. That grey area northwest of Vegas is a big area of interest: it includes the Nevada Test Site, the Tonopah Test Range, and Area 51.

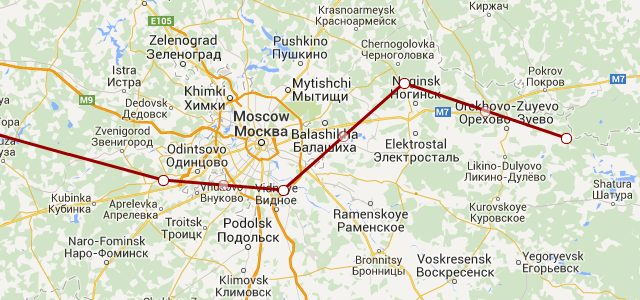

Returning to Russia (December 13th)

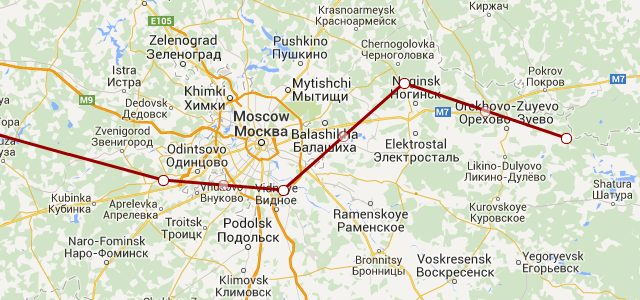

RA-85655 made the long journey back starting on December 13th (shown at the top of this post). I didn't get a landing point for the flight, but it did fly just south of Moscow before I lost it.

Data

Here is the data I used in this post, along with some simple instructions on how you can plot it yourself in a browser. For the record, this was all work I did in my spare time using data I collected from public sources (FlightRadar24).

2015-02-14 Sat

gis planes

Mind the gaps. Whenever I use a public website that provides airline information, I'm impressed with how much they know about flights that are in progress all around the world. However, there have been a number of times when I've started drilling down into the data only to find that the samples I want aren't there. Given that (I believe) a lot of the data actually comes from volunteers that monitor their own local regions with something like dump1090, it should be expected that there are gaps in coverage. That got me thinking: could I look at a day's worth of airline track data and estimate where there isn't coverage?

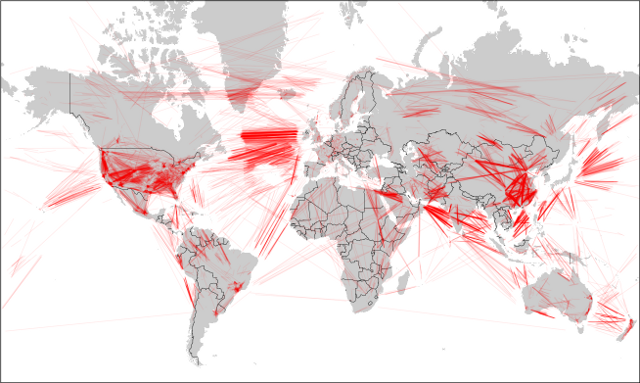

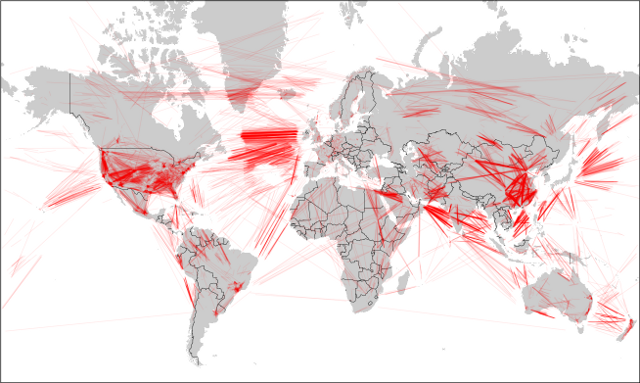

Earlier this week (while flying to Albuquerque!) I wrote a script to search for gaps in a collection of airline tracks. All this script does is walk through a track and inspect the amount of time between two sample data points. If the time for the segment is greater than a certain threshold, I estimate that the plane wasn't in a place where anyone could hear it and then plot the segment in red. Click on the pictures to get a closer view.
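The walk described above can be sketched in a few lines of Python. This is a minimal sketch rather than the actual gap_plotter.py: the (timestamp, lat, lon) tuple layout, the `find_gaps` name, and the 600-second threshold are all assumptions for illustration.

```python
def find_gaps(track, max_gap_s=600):
    """Return (start, end) sample pairs whose time difference exceeds max_gap_s.

    `track` is a list of (timestamp_s, lat, lon) tuples sorted by time.
    """
    gaps = []
    # Compare each sample with the one that follows it.
    for prev, curr in zip(track, track[1:]):
        if curr[0] - prev[0] > max_gap_s:
            gaps.append((prev, curr))
    return gaps
```

Each returned pair holds the last sample before the silence and the first sample after it, which is all a plotter needs to draw the red segment.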

Again, missing data segments are in red (segments with data are not plotted, since they overwhelm the plots). As expected, a lot of gaps appear over the oceans, where nobody is listening. As FlightRadar24 has pointed out, coverage in different countries depends on the country's ADS-B policies and ground stations.

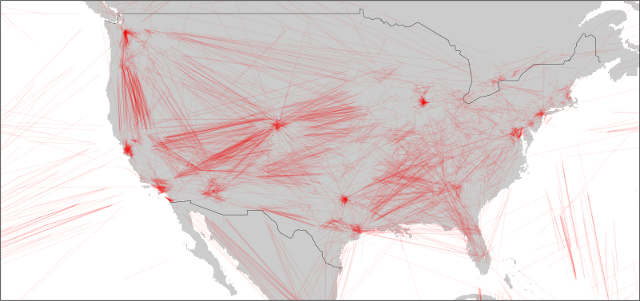

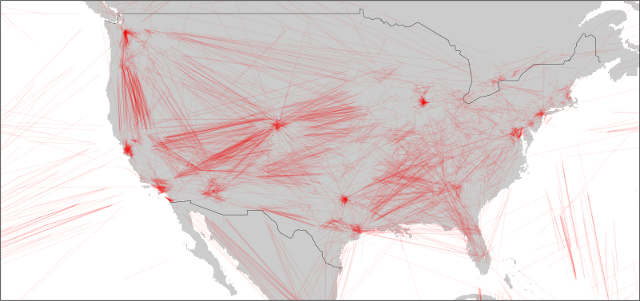

USA

I was a little surprised to see that the US has some dead space in the middle and southeast. That might not be surprising as the FAA doesn't make the data available for free (afaik), and there are large unpopulated areas in the country.

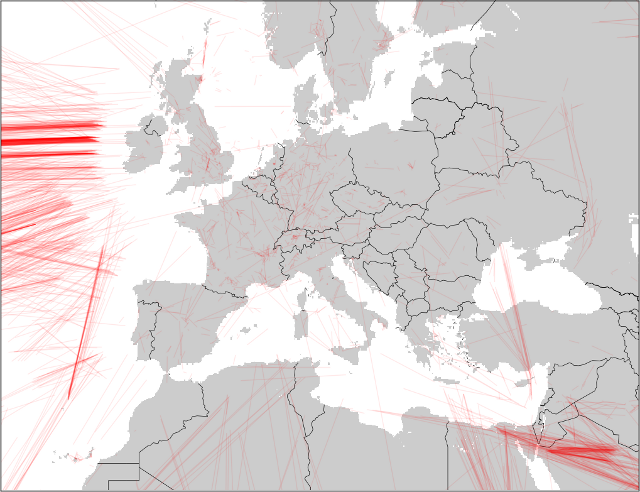

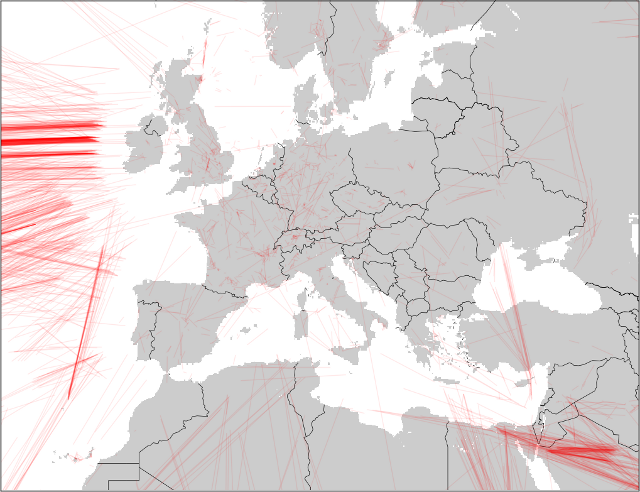

Europe

Europe seems to be well covered. I'm not sure if that's because people do a lot more tracking there, or if governments do the right thing and make the data available.

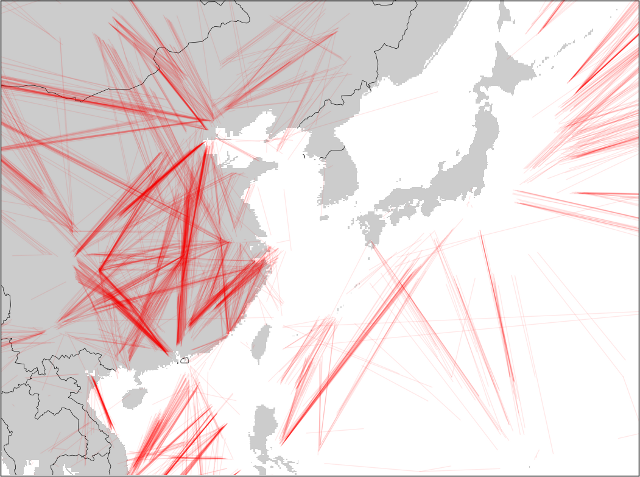

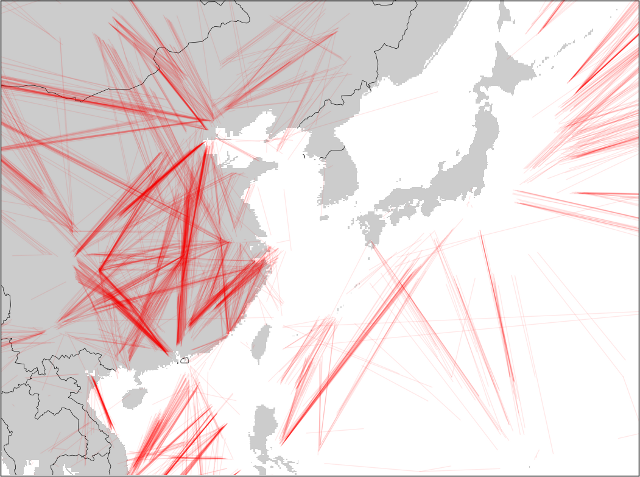

Western Pacific Rim

The Western Pacific Rim is interesting. Japan looks like it has excellent coverage. China must have coverage in the cities or on the coast, as there are a lot of flights with missing data in the interior.

I'd been hoping there'd be something more interesting to look at around Korea. I've read that North Korea jams GPS from time to time and was hoping that I'd see a lot of gaps there. There weren't any stories of jamming for 2014 (afaik). Plus, I don't think there are many planes flying over NK to begin with.

Code

The gap_plotter.py plotter I threw together for this and a tiny sample dataset can be found here:

github:airline-plotters

This code just overlays line plots, so it's slow and breaks if you throw more than a day's worth of data at it. Some day I'd like to go back and build a proper analysis tool that grids and counts things.
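As a rough idea of what "grids and counts" could look like, here is a small Python sketch that bins points (for example, the midpoints of gap segments) into latitude/longitude cells. It is not part of the plotter in the repo. The `grid_counts` name and the one-degree default cell size are assumptions.

```python
import math
from collections import Counter


def grid_counts(points, cell_deg=1.0):
    """Count how many (lat, lon) points fall in each cell_deg x cell_deg cell.

    Cells are keyed by the (row, col) index of their south-west corner.
    """
    counts = Counter()
    for lat, lon in points:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts
```

Counting into cells keeps memory bounded by the grid size instead of the number of tracks, which is what would let it scale past a single day of data.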

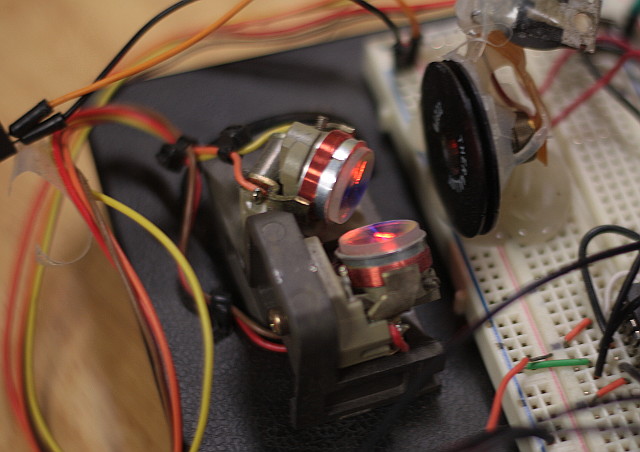

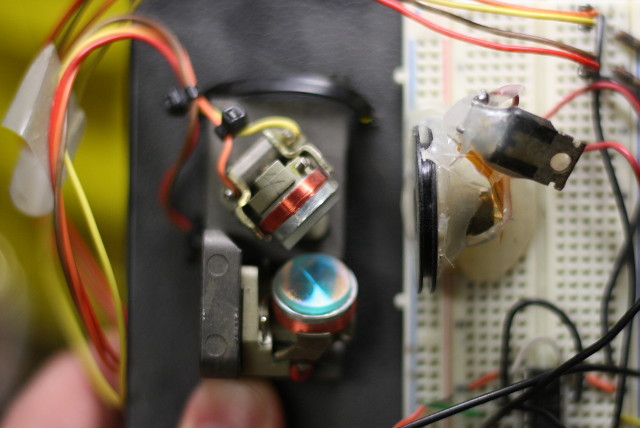

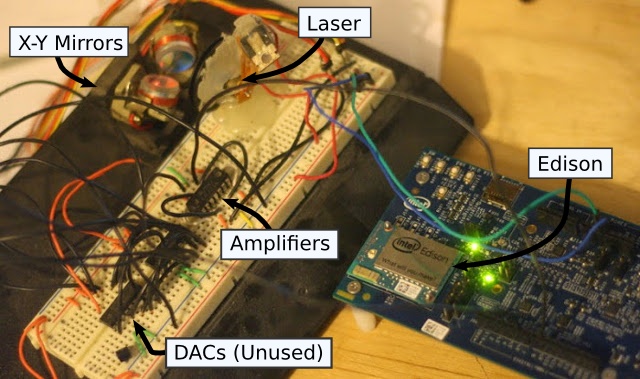

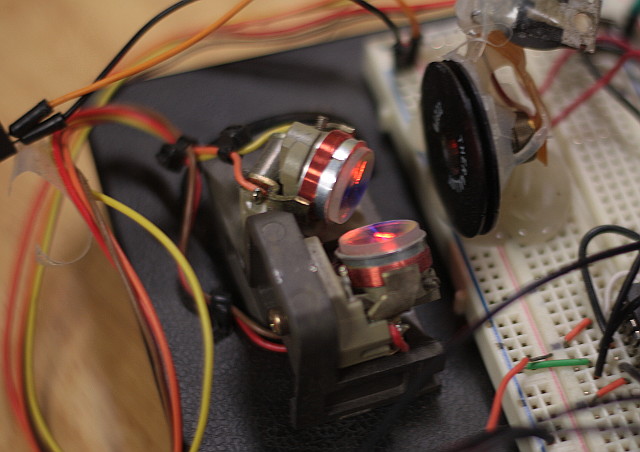

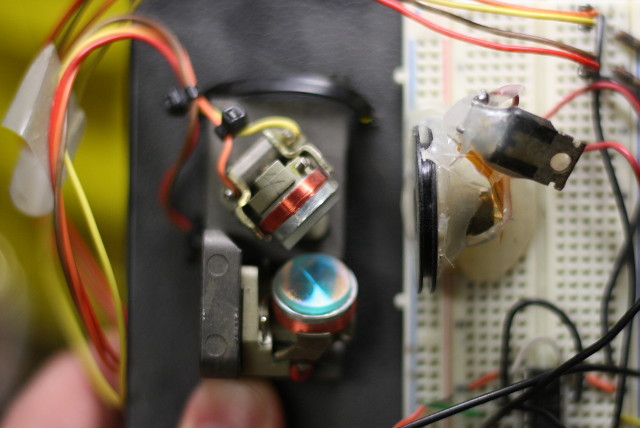

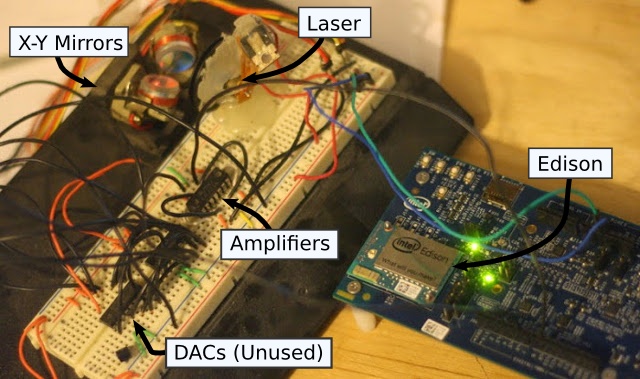

Tonight I used my Intel Edison to revive an old laser sketcher project I originally built for an "interfacing small computers" class nearly 20 years ago. This version is slow, crude, and low-res, but good enough to bring back some memories of previous work.

Interfacing Small Computers Class

Back in undergrad I took a class called "Interfacing Small Computers" that was all about connecting hardware to PCs of that era. It was a fun course that talked about a number of practical hardware/software design issues you had to deal with when developing cards that plugged into the PC's buses (AT, ISA, EISA, MCA,..). The labs were hard but fun: they used a special breakout board that routed all the signals from the ISA bus out to a large breadboard where you could connect in discrete logic chips. Students had to build circuits that decoded the bus's address signals, read/write the data bus, and do things like trigger interrupts. On the host side we wrote DOS drivers and simple applications in C to control the hardware. It was the most complicated breadboard work I've ever had to do (teaching me that designing a few 8-bit buses was a lot easier than wiring them up by hand).

In retrospect I was lucky to have taken the class at a time when you could still interface to a PC using a breadboard and TTL logic gates. Towards the end of the class we caught a glimpse of where I/O was heading: we started using Xilinx FPGAs to implement our bus handling logic. It wasn't long after this that PCI came out and ASICs/FPGAs became the only practical way to put your hardware on a bus.

Class Project

Towards the end of the quarter, my lab partner and I struggled to think of something we could build for the open-ended final lab project. In search of ideas, I talked to a friend of mine outside of school who had a knack for building interesting things. He said he'd just acquired an old, broken laser disc player that had some interesting parts I could have. In addition to having a bulky HeNe laser, the player had an X-Y mirror targeting system that was used to aim the laser at the right spot on the disc. The X-Y mirrors were a pretty clever design: all they did was place a mirror at the end of a slug in an inductor coil for each direction. The inductor moved the slug in or out of the coil depending on changes in current, so all you had to do was connect the coil to an analog output and change its voltage to position the mirror. The two mirror coils came in a single unit that was already oriented properly for X and Y reflections.

We went about building a simple board to feed the coils. The HeNe laser was too big (and had a dicey power supply), but luckily my friend also had a new, red laser diode I could use. We positioned it to hit the X-Y mirrors and fastened both to the board. Next, we used a pair of piggy-backed opamps to amplify the signals going to the inductors. Finally, we used a pair of 8-bit DACs to convert our digital data values to analog signals. We picked DACs that had built-in input registering. This meant that our ISA bus logic only had to generate signals to trigger the individual DACs to grab data off the ISA data bus when either the X or Y address appeared on the ISA address bus. Our design had to use a brand new Xilinx FPGA the lab had just received, so my partner ported the address decode logic to the FPGA.

I wrote some simple software for the host that continuously streamed coordinate data values to the mirror inductor coils (in retrospect, we should have buffered these in the FPGA, but at the time, the FPGA was a big unknown). The mirror coils probably weren't designed to run at high speeds, but we were able to stream data to them fast enough that we could render simple geometries like squares and circles. We got a lot of praise from others in the lab, as it was definitely a low-complexity, high-satisfaction project.

Reviving the Laser Sketcher with the Edison

After I finished the class, I didn't have a way to connect the sketcher to a computer because I didn't have an obvious way to stream data into the board. I looked at connecting it to a parallel port, but the whole thing got shelved because of time. After graduating, I thought about plugging the sketcher into an AVR embedded processor, but the AVRs didn't provide a way to change data vectors easily. The Intel Edison board lowered the effort bar so much that I didn't have an excuse to put this off anymore.

Connecting the laser sketcher up to the Edison was pretty easy. All I needed to do was find a way to supply analog X-Y signals to the amplifiers. The Arduino Edison board has a few pulse width modulation (PWM) pins that approximate an analog signal through pulse trains. The board had simple drivers for writing to the PWM generators, so all I had to do was create some data values and then stream them to the pins as fast as possible. The pulses make the output a little blocky, but are good enough for now. It'd probably be better to put a capacitor or opamp integrator w/ timed clearing in there to smooth the signal.
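The "create some data values and stream them" step can be sketched as follows. This is a hedged Python illustration, not the code I ran on the Edison: the function names are made up, and `write_x` / `write_y` are placeholders for whatever call sets the PWM duty cycle (a fraction between 0 and 1) on the X and Y pins of your board.

```python
import math


def circle_duty_cycles(n_points=64, center=0.5, radius=0.4):
    """Return n_points (x, y) duty-cycle pairs in [0, 1] tracing a circle."""
    points = []
    for i in range(n_points):
        theta = 2 * math.pi * i / n_points
        x = center + radius * math.cos(theta)
        y = center + radius * math.sin(theta)
        points.append((x, y))
    return points


def sketch(points, write_x, write_y, repeats=100):
    """Stream the points to two PWM outputs by calling the supplied writers.

    The shape is redrawn `repeats` times so that it persists long enough
    to be seen.
    """
    for _ in range(repeats):
        for x, y in points:
            write_x(x)
            write_y(y)
```

Keeping the shape generation separate from the pin writes makes it easy to swap in squares or other figures without touching the hardware side.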

2014-11-14 Fri

tracks gis code

TL;DR: It isn't that hard to use third party sites like RunKeeper to load large amounts of fake health data into Virgin Health Miles. However, it's not worth doing so, because VHM only gives you minimal credit for each track (which is the same problem most legitimate exercise activities have in VHM). I've written a python program that helps you convert KML files into fake tracks that you can upload, if you're passive aggressive and don't like the idea of some corporation tracking where you go running.

A nice Jog on Three Mile Island

Well-Being Incentive Program

A few years back my employer scaled back our benefits, which resulted in higher health care fees and worse coverage for all employees. In consolation, they started a well being incentive program through Virgin Health Miles (VHM). It's an interesting idea because they encourage healthier behaviors by treating it as a game: an employee receives a pedometer and then earns points for walking 7k, 12k, or 20k steps in a day. The VHM website provides other ways to earn points and includes ways to compete head to head with your friends. At the end of the year, my employer looks at your total points and gives you a small amount of money in your health care spending account for each level you've completed. In theory this is a win-win for everyone. The company gets healthier employees that don't die in the office as much. Employees exercise more and get a little money for health care. VHM gets to sell their service to my employer and then gets tons of personal health information about my fellow employees (wait, what?).

As you'd expect, there are mixed feelings about the program. Many people participate, as it isn't hard to keep a pedometer in your pocket and it really does encourage you to do a little bit more. Others strongly resent the program, as it is invasive and has great potential for abuse (could my employer look at this info and use it against me, like in Gattaca?). Others have called out the privacy issue.

Given the number of engineers at my work, a lot of people looked into finding ways to thwart the hardware. People poked at the USB port and monitored the data transfers upwards, but afaik, nobody got a successful upload of fake data. The most common hack people did was to just put their pedometer on something that jiggled a lot (like your kids). This was such a threat that pedometer fraud made it into a story in the Lockheed Martin Integrity Minute (worth watching all three episodes, btw).

My Sob Story

This year I actively started doing VHM, using both a pedometer to log my steps and RunKeeper to log my bike rides. When my first few bike rides netted only 10 points, I discovered that a track of GPS coordinates did not constitute sufficient proof that I had actually exercised (!). In order to "make it real" I had to buy a Polar Heart Rate Monitor, which cost as much as the first two rewards my employer would give me in the health incentive program. I bought it, because it sounded like it would help me get points for other kinds of exercise, like my elliptical machine.

Unfortunately, RunKeeper estimates how much exercise you've done by using your GPS track to calculate your distance. Since my elliptical machine doesn't move, RunKeeper logs my heart rate data, but then reports zero calories, since I went nowhere. When I asked VHM if there was a way to count my elliptical time, they said all I could do was get 10 points for an untrusted log entry, or count the steps with my pedometer (potentially getting 60-100 points).

The pedometer was fine, but then midway through the year it died (not from going through the washing machine either). I called up VHM and they wanted $17 for a new one. I wrote my employer and asked for a spare and was told tough luck. $17 isn't much, but I'd already blown $75 on the HRM, and it all started feeling like a scam. Anything where you have to pay money to earn the right to make money just doesn't sound right, especially if it's through your workplace.

Equivalent Stats

Outside of VHM, I've been keeping a log of all the different days I've exercised this year. I had the thought, wouldn't it be nice if I could upload that information in a way that would give me the points VHM would have awarded me if their interfaces weren't so terrible? What if I wrote something that could create data that looked like a run, but was actually just a proxy for my elliptical work?

I discovered that RunKeeper has a good interface for uploading data (since they just want you to exercise, after all), and that they accepted GPX and TCX formatted data files. I wrote a python script to generate a GPX track file that ran around a circle at a rate and duration that matched my elliptical runs. Since I knew VHM needed HR data, I then generated heart rate data for each point. Circles are kind of boring, so the next thing I did was add the ability to import a kml file of points and then turn it into a run. Thus, you can go to one of the many track generator map sites, drop a bunch of points, and create the route you want to run. My program uses the route as a template to make a running loop, and jiggles the data so it isn't exactly the same every time through. Fun.
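The circle version of the generator can be sketched roughly like this. It is a simplified Python illustration rather than the script in my repo. The function name, the fixed 140 bpm base heart rate, and the metres-to-degrees approximation are assumptions, and I'm assuming heart rate is carried in Garmin's TrackPointExtension element, which is a common way to put it in GPX.

```python
import math
import random
from datetime import datetime, timedelta, timezone

GPX_HEADER = (
    '<?xml version="1.0" encoding="UTF-8"?>\n'
    '<gpx version="1.1" creator="circle-sketch" '
    'xmlns="http://www.topografix.com/GPX/1/1" '
    'xmlns:gpxtpx="http://www.garmin.com/xmlschemas/TrackPointExtension/v1">\n'
    '<trk><trkseg>\n'
)


def circle_gpx(lat0, lon0, radius_m, speed_mps, duration_s, start, step_s=5, seed=0):
    """Build a GPX string for laps around a circle centred on (lat0, lon0).

    `start` is assumed to be a UTC datetime; one point is emitted every step_s.
    """
    rng = random.Random(seed)
    circumference = 2 * math.pi * radius_m
    out = [GPX_HEADER]
    for t in range(0, duration_s + 1, step_s):
        angle = 2 * math.pi * (speed_mps * t / circumference)
        # Small-offset approximation: about 111,320 m per degree of latitude.
        dlat = (radius_m * math.sin(angle)) / 111320.0
        dlon = (radius_m * math.cos(angle)) / (111320.0 * math.cos(math.radians(lat0)))
        hr = 140 + rng.randint(-5, 5)  # made-up heart rate with a little jitter
        stamp = (start + timedelta(seconds=t)).strftime("%Y-%m-%dT%H:%M:%SZ")
        out.append(
            f'<trkpt lat="{lat0 + dlat:.6f}" lon="{lon0 + dlon:.6f}">'
            f'<time>{stamp}</time>'
            f'<extensions><gpxtpx:TrackPointExtension><gpxtpx:hr>{hr}</gpxtpx:hr>'
            f'</gpxtpx:TrackPointExtension></extensions></trkpt>\n'
        )
    out.append('</trkseg></trk>\n</gpx>\n')
    return "".join(out)
```

For example, `circle_gpx(37.68, -121.77, 100, 3.0, 1800, datetime(2014, 11, 1, 18, 0, tzinfo=timezone.utc))` would describe thirty minutes of laps around a 100 m circle. Whether a given site accepts the result is a separate question.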

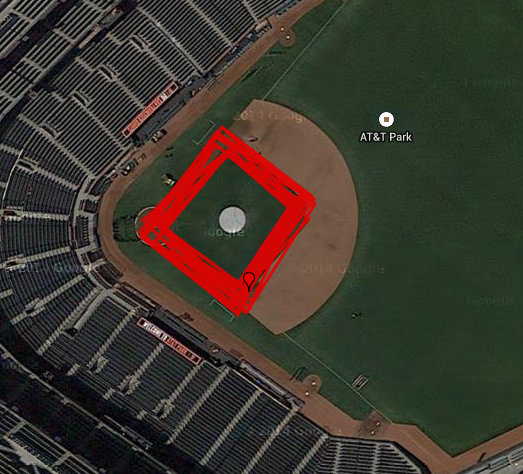

A Few Sample Runs

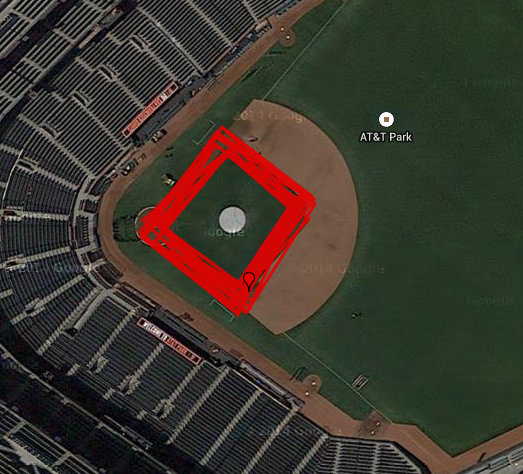

For fun, I made a few KML template files for runs that would be difficult for people to actually do. The first one at the top of this post was a figure eight around the cooling towers at Three Mile Island. Next, since the Giants were in the World Series, I decided it would be fitting to do some laps around AT&T Park.

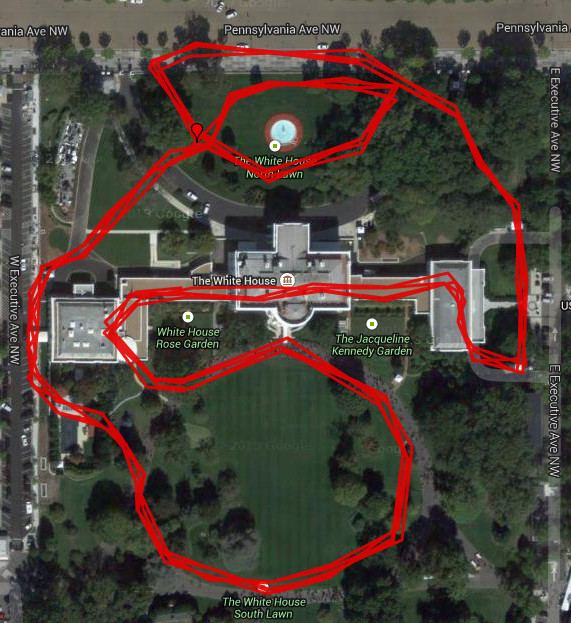

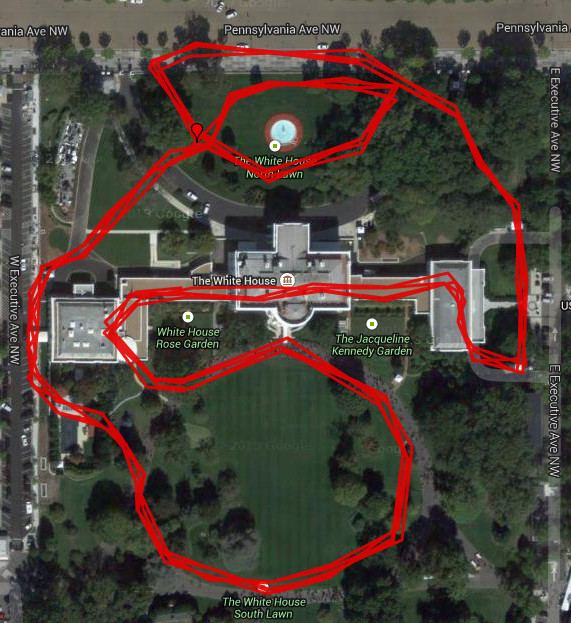

With all the stories in the news, I thought it would be fitting to squeeze in a few laps around the White House.

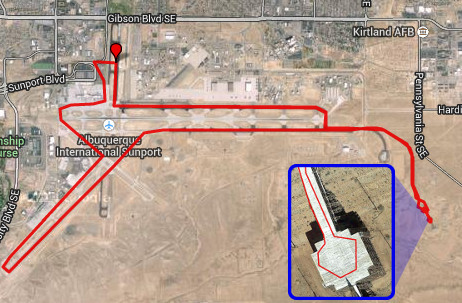

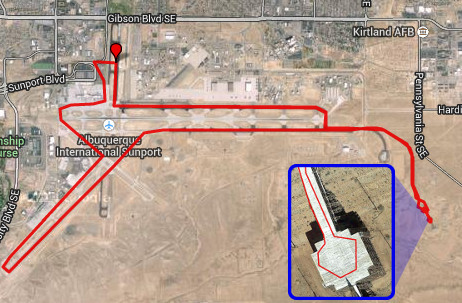

And last, I (in theory) made a break for it at Kirtland AFB and went out to see the old Atlas-I Trestle (a giant wooden platform they used to roll B-52s out on and dose them with EMP).

Mission Aborted

I decided to abort uploading all of my proxy data for two reasons. First, even with GPS and heartbeat values, VHM still only assigns 10 points for each run. I was hoping to get "activity minute" credits, which would be on the order of 60 points per run, but alas, they must have some additional check to see whether the data came from your phone or another source. This problem really emphasizes why I dislike VHM: they only acknowledge data from a small number of devices that are meant to log certain types of exercises. If you want to do something else, you're out of luck. Second, VHM's user agreement says something about not submitting fraudulent data. While I wouldn't consider uploading proxy data to be any less ethical than what people with pedometers do at the end of the day to get to the next level, I don't want them coming after me because I was trying to compensate for their poorly-built, privacy-invading system.

If someone wanted to pick this up, I'd recommend looking at the data files RunKeeper generates in its apps. I've compared "valid" routes to my "invalid" ones, and I don't see any red flags why the invalid ones would be rejected upstream. I suspect RunKeeper passes on some info about where the data originated, which VHM uses to reject tracks that didn't come from the app.

Code

I've put my code up on GitHub for anyone that wants to generate their own fake data. It isn't too fancy, it just parses the kml file to get a list of coordinates, and then generates a track with lon/lat/time values to simulate a run based on the template. It uses Haversine to compute the distance between points so it can figure out how much time it takes to go between them at a certain speed.

github:rungen
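For reference, the distance-to-time step looks roughly like the following. This is a minimal Python sketch of the standard Haversine formula, not a copy of the code in the repo. The function names and the mean Earth radius constant are my choices for illustration.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def leg_times(points, speed_mps):
    """Seconds needed to travel each leg of a (lat, lon) route at speed_mps."""
    return [haversine_m(*p, *q) / speed_mps for p, q in zip(points, points[1:])]
```

Accumulating the leg times from a chosen start time gives the timestamp for each point of the template route.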